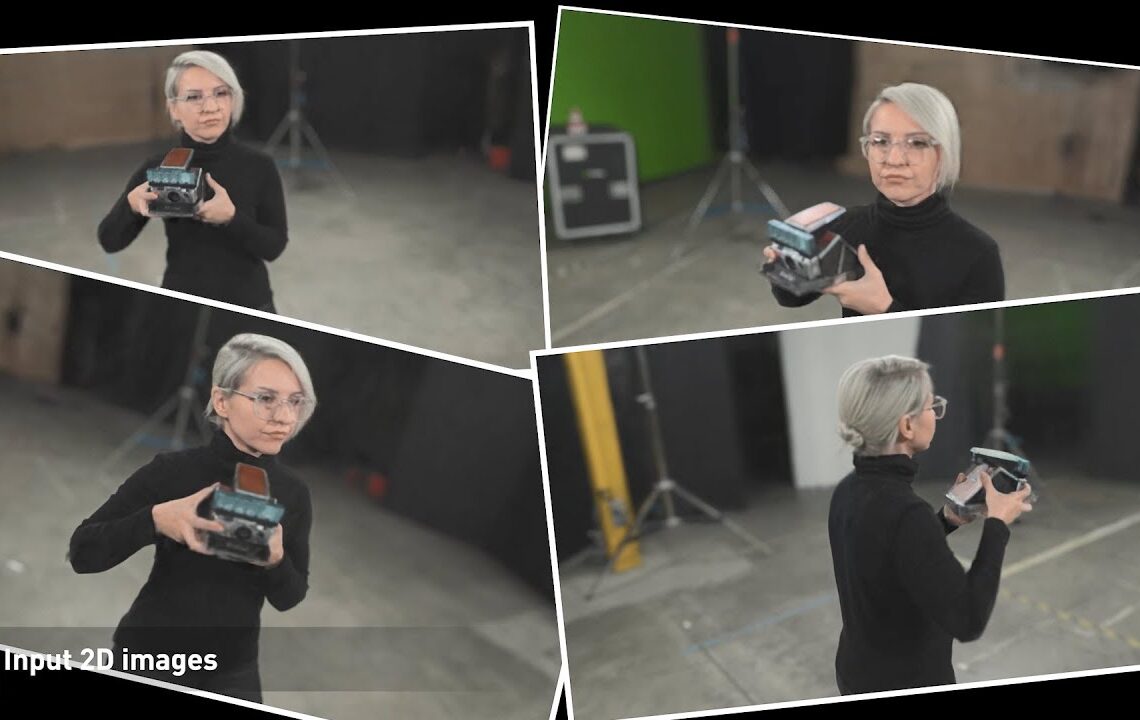

NVIDIA has shared new research, Instant NeRF, showing an AI model that can reconstruct a 3D scene from a small collection of 2D images in a few seconds, using a technique known as neural radiance fields (NeRFs). To reconstruct a scene, a NeRF needs a few dozen images taken from multiple positions, along with the camera position of each shot. The network then fills in the missing sections by predicting the color of light radiating in any direction, from any point in 3D space.
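To make "predicting the color of light radiating in any direction, from any point in 3D space" a bit more concrete, here is a minimal sketch of the volume-rendering step used in the original NeRF formulation. Each camera ray is sampled at many points. A field function returns a density and a color at each point, and the colors are blended front to back, weighted by how much light still reaches each sample. The `toy_field` function is a hypothetical stand-in for the trained neural network (a solid red sphere). `render_ray` and its parameters are illustrative names, not NVIDIA's API. The sketch does not cover training or the speed-up techniques behind Instant NeRF.

```python
import math


def toy_field(x, y, z, dx, dy, dz):
    """Hypothetical stand-in for a trained NeRF network.

    Returns (density, (r, g, b)) for a 3D point and viewing direction.
    Here it is a solid red sphere of radius 1 at the origin; a real NeRF
    would let the color depend on the direction (dx, dy, dz) too.
    """
    inside = x * x + y * y + z * z <= 1.0
    density = 10.0 if inside else 0.0
    return density, (1.0, 0.0, 0.0)


def render_ray(field, origin, direction, near=0.0, far=4.0, n_samples=128):
    """Composite the color seen along one camera ray.

    Uses the NeRF-style quadrature:
        C   = sum_i T_i * (1 - exp(-sigma_i * delta)) * c_i
        T_i = exp(-sum_{j<i} sigma_j * delta)
    `direction` is assumed to be a unit vector.
    Returns (rgb, remaining_transmittance).
    """
    delta = (far - near) / n_samples  # uniform segment length
    transmittance = 1.0               # fraction of light not yet absorbed
    color = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * delta  # sample at the midpoint of each segment
        px, py, pz = (o + t * d for o, d in zip(origin, direction))
        sigma, rgb = field(px, py, pz, *direction)
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            color[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
    return tuple(color), transmittance


if __name__ == "__main__":
    # A ray aimed straight at the sphere, and one that passes beside it.
    print(render_ray(toy_field, (0.0, 0.0, -3.0), (0.0, 0.0, 1.0)))
    print(render_ray(toy_field, (0.0, 2.0, -3.0), (0.0, 0.0, 1.0)))
```

Rays that hit the sphere come back almost pure red with nearly zero remaining transmittance, while rays that miss return black with full transmittance. Rendering a whole image just means repeating this for one ray per pixel from a given camera position. That is why the camera pose of every input photo matters.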

David Luebke, vice president for graphics research at NVIDIA, explains that “if traditional 3D representations like polygonal meshes are akin to vector images, NeRFs are like bitmap images: they densely capture the way light radiates from an object or within a scene. In that sense, Instant NeRF could be as important to 3D as digital cameras and JPEG compression have been to 2D photography — vastly increasing the speed, ease and reach of 3D capture and sharing.”

Find out more on the NVIDIA blog.

Don’t expect to get a useful mesh out of this; it’s not photogrammetry. Very cool though.

But for things like backdrops or background items, it could be usable.