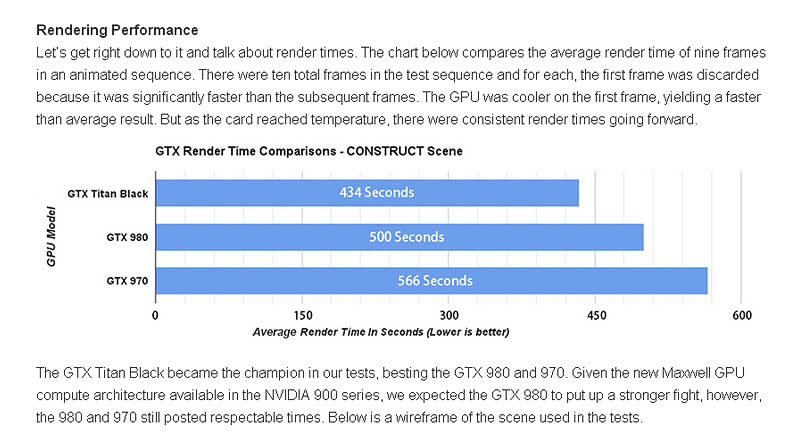

Boxx has made available a comparison of the rendering performance of NVIDIA GeForce cards. More on Boxx’s blog.

GeForce GTX rendering benchmarks




© 2025 CGPress

I know I’m not the only one, but for the last couple of years I’ve been pushing the developers I know to start using GPUs more and more, and I’ve been met with a lot of resistance. The most common argument is “the time it would take to implement would not yield the sales to justify the cost”. I understand that this is the current reality, but I hope NVIDIA and AMD work with VFX-focused companies to make that argument less and less valid. It would help everyone, from studios to “indies” working on their own small productions.

@Tobbe

Especially when you see them doing these physics tech demos. ILM does this with Plume. I would be willing to pay more if that meant I could do production-ready fluid sims in minutes to hours instead of hours to days.

We’re sitting here like cavemen, simming on double-digit CPU core counts when we could be simming on quadruple-digit GPU core counts! Here’s looking at you, Sitni Sati 😉

But on a serious note, it would be awesome if our GPUs were used more in the sim/solve process, instead of just for viewing the results as they are now.

Most developers can’t move forward with GPU computing because they’re stuck choosing between CUDA, which only works on NVIDIA cards, and OpenCL, which NVIDIA refuses to support properly. These are two largely incompatible paths, so it’s understandable that many developers are stuck at that crossroads.

AMD and Intel, meanwhile, have both recently moved up to OpenCL 2.0. On supported platforms (i.e. all future AMD and Intel devices), that means no more GPU memory limits, no more CPUs sitting idle while the GPU is working, and baseline compatibility from sub-notebooks to workstations and servers.

So really, from here on, if we want to see any progress in this area, CUDA has to die.

Loving my GTX 970 with Redshift3D... absolutely amazing. The Max version is coming along nicely too.

I can’t wait to try Redshift3D with Max.

Multicore GPUs certainly seem to have been blowing past CPUs for the last few years. I suspect the whole CPU/RAM/GPU/northbridge-southbridge architecture needs to be rethought. More and more, the CPU seems like a controller of controllers instead of the powerhouse it used to be; everything else seems to be doing the heavy lifting.